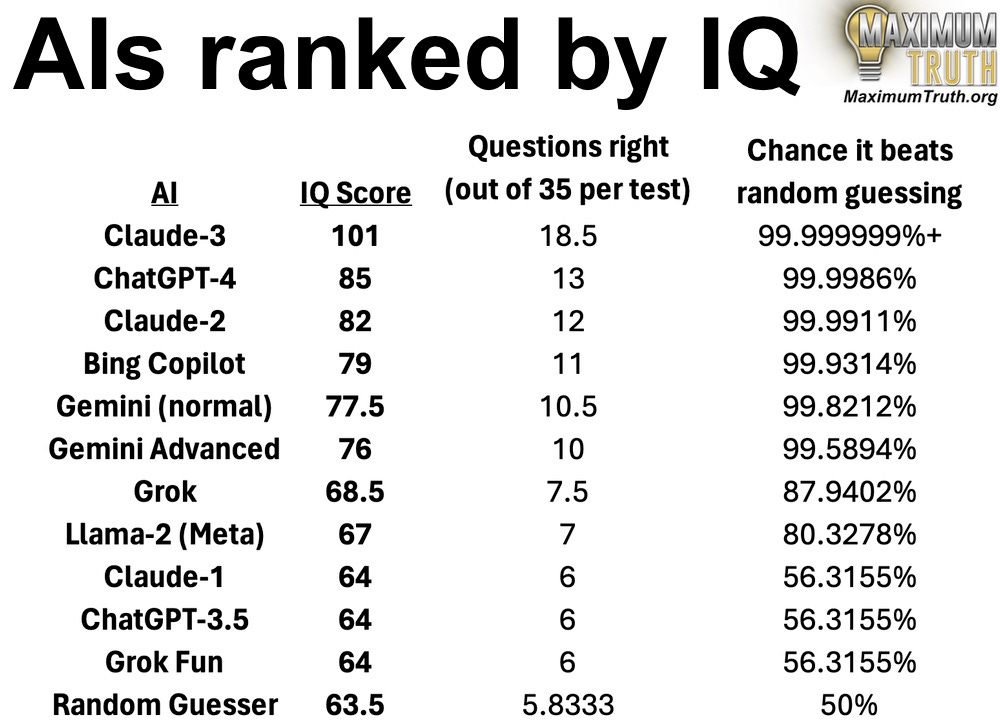

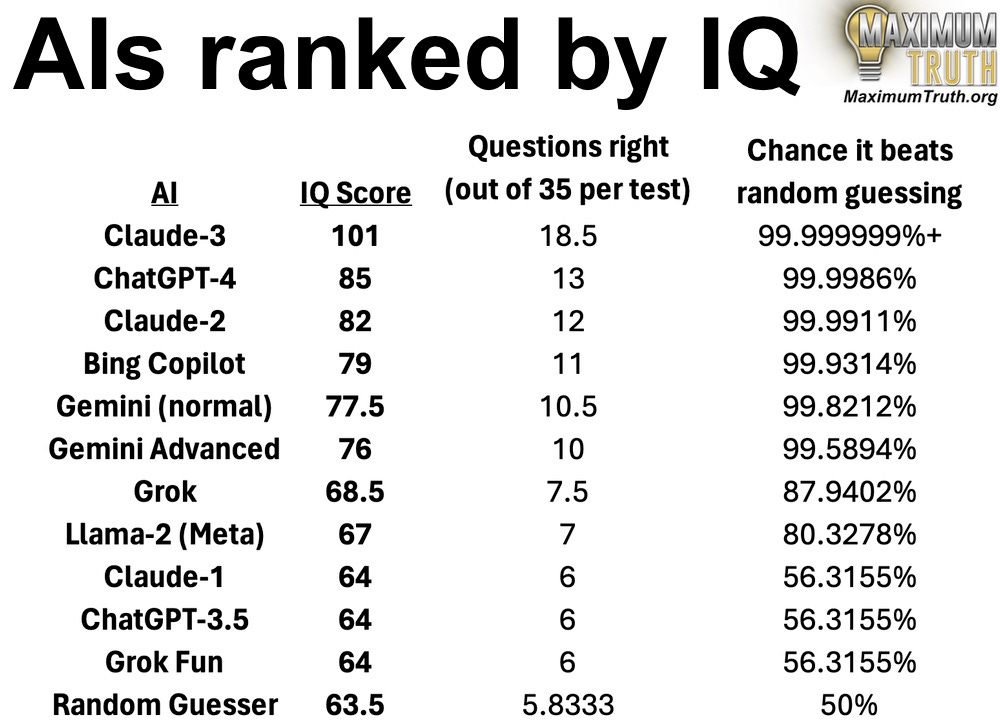

Claude-3

Interesting and wise words from Claude-3, an AI measured at 101 IQ.

https://www.maximumtruth.org/p/ais-ranked-by-iq-ai-passes-100-iq

Interesting and wise words from Claude-3, an AI measured at 101 IQ.

https://www.maximumtruth.org/p/ais-ranked-by-iq-ai-passes-100-iq

This dude is just incredible. That's all. Please watch this video, and then watch it again later.

How to deep dream.

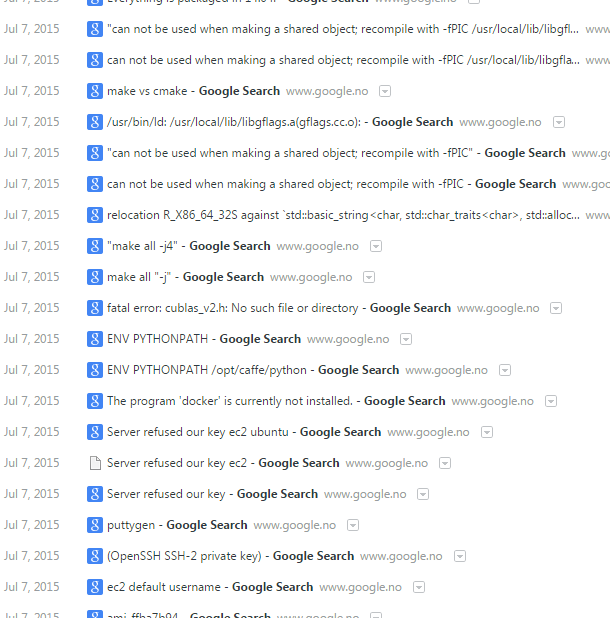

1. Setup an AWS EC2 g2.2xlarge

2. ssh

Make sure to replace all "-j8" with the number of cores on your machine.

do cat /proc/cpuinfo | grep processor | wc -l to see how many core you have

3. paste this:

sudo apt-get update \ && sudo apt-get upgrade -y \ && sudo apt-get dist-upgrade -y \ && sudo apt-get install -y bc cmake curl gfortran git libprotobuf-dev libleveldb-dev libsnappy-dev libopencv-dev libboost-all-dev libhdf5-serial-dev liblmdb-dev libjpeg62 libfreeimage-dev libatlas-base-dev pkgconf protobuf-compiler python-dev python-pip unzip wget python-numpy python-scipy python-pandas python-sympy python-nose libprotobuf-dev libleveldb-dev libsnappy-dev libopencv-dev libhdf5-serial-dev build-essential \ && sudo apt-get install libgflags-dev libgoogle-glog-dev liblmdb-dev protobuf-compiler -y \ && sudo apt-get install --no-install-recommends libboost-all-dev \ && cd ~ \ && wget http://developer.download.nvidia.com/compute/cuda/repos/ubuntu1404/x86_64/cuda-repo-ubuntu1404_7.0-28_amd64.deb \ && sudo dpkg -i cuda-repo-ubuntu1404_7.0-28_amd64.deb \ && rm cuda-repo-ubuntu1404_7.0-28_amd64.deb \ && sudo apt-get update \ && sudo apt-get install cuda -y \ && sudo apt-get install linux-image-extra-virtual -y \ && sudo apt-get install linux-source -y && sudo apt-get install linux-headers-`uname -r` -y

4. paste this (reboot the machine):

sudo reboot

5. paste these:

sudo modprobe nvidia

echo "export PATH=$PATH:/usr/local/cuda-7.0/bin" | sudo tee --append ~/.bashrc \ && echo "export LD_LIBRARY_PATH=:/usr/local/cuda-7.0/lib64" | sudo tee --append ~/.bashrc

sudo ldconfig /usr/local/cuda/lib64

sudo apt-get install python-protobuf python-skimage -y

6. paste this:

cd ~ \ && sudo git clone https://github.com/BVLC/caffe.git \ && cd ~/caffe \ && sudo cp Makefile.config.example Makefile.config \ && sudo make all -j8 \ && sudo make test -j8 \ && sudo make runtest -j8 \ && sudo make pycaffe \ && sudo make distribute

7. paste these:

echo "export PYTHONPATH=:~/caffe/python" | sudo tee --append ~/.bashrc \ && source ~/.bashrc

sudo ~/caffe/scripts/download_model_binary.py ~/caffe/models/bvlc_googlenet

sudo ln /dev/null /dev/raw1394

8. find some code and play (e.g. https://github.com/graphific/DeepDreamVideo)

Have fun!

Make dreams (forked from this) (working prototype)

#!/usr/bin/python

__author__ = 'graphific'

import argparse

import os

import os.path

import errno

import sys

import time

# imports and basic notebook setup

import numpy as np

import scipy.ndimage as nd

import PIL.Image

from google.protobuf import text_format

import caffe

# a couple of utility functions for converting to and from Caffe's input image layout

def preprocess(net, img):

return np.float32(np.rollaxis(img, 2)[::-1]) - net.transformer.mean['data']

def deprocess(net, img):

return np.dstack((img + net.transformer.mean['data'])[::-1])

#Make dreams

def make_step(net, step_size=1.5, end='inception_4c/output', jitter=32, clip=True):

'''Basic gradient ascent step.'''

# input image is stored in Net's 'data' blob

src = net.blobs['data']

dst = net.blobs[end]

ox, oy = np.random.randint(-jitter, jitter + 1, 2)

src.data[0] = np.roll(np.roll(src.data[0], ox, -1), oy, -2) # apply jitter shift

net.forward(end=end)

dst.diff[:] = dst.data # specify the optimization objective

net.backward(start=end)

g = src.diff[0]

# apply normalized ascent step to the input image

src.data[:] += step_size / np.abs(g).mean() * g

src.data[0] = np.roll(np.roll(src.data[0], -ox, -1), -oy, -2) # unshift image

if clip:

bias = net.transformer.mean['data']

src.data[:] = np.clip(src.data, -bias, 255-bias)

def deepdream(net, base_img, iter_n=10, octave_n=4, octave_scale=1.4, end='inception_4c/output', clip=True, **step_params):

# prepare base images for all octaves

octaves = [preprocess(net, base_img)]

for i in xrange(octave_n - 1):

octaves.append(nd.zoom(octaves[-1], (1, 1.0 / octave_scale, 1.0 / octave_scale), order=1))

src = net.blobs['data']

detail = np.zeros_like(octaves[-1]) # allocate image for network-produced details

for octave, octave_base in enumerate(octaves[::-1]):

h, w = octave_base.shape[-2:]

if octave > 0:

# upscale details from the previous octave

h1, w1 = detail.shape[-2:]

detail = nd.zoom(detail, (1, 1.0 * h / h1, 1.0 * w / w1), order=1)

src.reshape(1, 3, h, w) # resize the network's input image size

src.data[0] = octave_base+detail

for i in xrange(iter_n):

make_step(net, end=end, clip=clip, **step_params)

# visualization

vis = deprocess(net, src.data[0])

if not clip: # adjust image contrast if clipping is disabled

vis = vis * (255.0 / np.percentile(vis, 99.98))

print(octave, i, end, vis.shape)

# extract details produced on the current octave

detail = src.data[0]-octave_base

# returning the resulting image

return deprocess(net, src.data[0])

def make_sure_path_exists(path):

# make sure input and output directory exist, if not create them.

# If another error (permission denied) throw an error.

try:

os.makedirs(path)

except OSError as exception:

if exception.errno != errno.EEXIST:

raise

layersloop = ['inception_4c/output',

'inception_4d/output',

'inception_4e/output',

'inception_5a/output',

'inception_5b/output',

'inception_5a/output',

'inception_4e/output',

'inception_4d/output',

'inception_4c/output'

]

def main(input, output, gpu, model_path, model_name, octaves, octave_scale, iterations, jitter, zoom, stepsize, layers, mode):

make_sure_path_exists(output)

if octaves is None:

octaves = 4

if octave_scale is None:

octave_scale = 1.5

if iterations is None:

iterations = 10

if jitter is None:

jitter = 32

if zoom is None:

zoom = 1

if stepsize is None:

stepsize = 1.5

if layers is None:

layers = 'customloop' # ['inception_4c/output']

#Load DNN

net_fn = model_path + 'deploy.prototxt'

param_fn = model_path + model_name # 'bvlc_googlenet.caffemodel'

# Patching model to be able to compute gradients.

# Note that you can also manually add "force_backward: true" line to "deploy.prototxt".

model = caffe.io.caffe_pb2.NetParameter()

text_format.Merge(open(net_fn).read(), model)

model.force_backward = True

open('tmp.prototxt', 'w').write(str(model))

# (mean) ImageNet mean, training set dependent

# (channel swap) the reference model has channels in BGR order instead of RGB

net = caffe.Classifier('tmp.prototxt', param_fn,

mean=np.float32([104.0, 116.0, 122.0]),

channel_swap=(2, 1, 0))

# should be picked up by caffe by default, but just in case

if gpu:

print("using GPU, but you'd still better make a cup of coffee")

caffe.set_mode_gpu()

caffe.set_device(0)

frame = np.float32(PIL.Image.open(input))

now = time.time()

print 'Processing image ' + input

# Modes

if mode == 'insane':

print '**************'

print '**************'

print '*** insane ***'

print '**************'

print '**************'

frame_i = 0

h, w = frame.shape[:2]

s = 0.05 # scale coefficient

for i in xrange(1000):

frame = deepdream(net, frame, iter_n=iterations)

PIL.Image.fromarray(np.uint8(frame)).save("insaneframes/%04d.jpg" % frame_i)

frame = nd.affine_transform(frame, [1-s, 1-s, 1], [h*s/2, w*s/2, 0], order=1)

frame_i += 1

# No modes

else:

#loop over layers as set in layersloop array

if layers == 'customloop':

endparam = layersloop[2 % len(layersloop)]

frame = deepdream(

net, frame, iter_n=iterations, step_size=stepsize, octave_n=octaves, octave_scale=octave_scale,

jitter=jitter, end=endparam)

#loop through layers one at a time until this specific layer

else:

endparam = layers[1 % len(layers)]

frame = deepdream(

net, frame, iter_n=iterations, step_size=stepsize, octave_n=octaves, octave_scale=octave_scale,

jitter=jitter, end=endparam)

saveframe = output + "/" + str(time.time()) + ".jpg"

later = time.time()

difference = int(later - now)

print '***************************************'

print 'Saving Image As: ' + saveframe

print 'Frame Time: ' + str(difference) + 's'

print '***************************************'

PIL.Image.fromarray(np.uint8(frame)).save(saveframe)

if __name__ == "__main__":

parser = argparse.ArgumentParser(description='Dreaming in videos.')

parser.add_argument(

'-i', '--input', help='Input directory where extracted frames are stored', required=True)

parser.add_argument(

'-o', '--output', help='Output directory where processed frames are to be stored', required=True)

parser.add_argument(

'-g', '--gpu', help='Use GPU', action='store_false', dest='gpu')

parser.add_argument(

'-t', '--model_path', help='Model directory to use', dest='model_path', default='../caffe/models/bvlc_googlenet/')

parser.add_argument(

'-m', '--model_name', help='Caffe Model name to use', dest='model_name', default='bvlc_googlenet.caffemodel')

#tnx samim:

parser.add_argument(

'-oct', '--octaves', help='Octaves. Default: 4', type=int, required=False)

parser.add_argument(

'-octs', '--octavescale', help='Octave Scale. Default: 1.4', type=float, required=False)

parser.add_argument(

'-itr', '--iterations', help='Iterations. Default: 10', type=int, required=False)

parser.add_argument(

'-j', '--jitter', help='Jitter. Default: 32', type=int, required=False)

parser.add_argument(

'-z', '--zoom', help='Zoom in Amount. Default: 1', type=int, required=False)

parser.add_argument(

'-s', '--stepsize', help='Step Size. Default: 1.5', type=float, required=False)

parser.add_argument(

'-l', '--layers', help='Array of Layers to loop through. Default: [customloop] \

- or choose ie [inception_4c/output] for that single layer', nargs="+", type=str, required=False)

parser.add_argument(

'-md', '--mode', help='Special modes (insane)', type=str, required=False)

args = parser.parse_args()

if not args.model_path[-1] == '/':

args.model_path = args.model_path + '/'

if not os.path.exists(args.model_path):

print("Model directory not found")

print("Please set the model_path to a correct caffe model directory")

sys.exit(0)

model = os.path.join(args.model_path, args.model_name)

if not os.path.exists(model):

print("Model not found")

print("Please set the model_name to a correct caffe model")

print("or download one with ./caffe_dir/scripts/download_model_binary.py caffe_dir/models/bvlc_googlenet")

sys.exit(0)

main(

args.input, args.output, args.gpu, args.model_path, args.model_name,

args.octaves, args.octavescale, args.iterations, args.jitter, args.zoom, args.stepsize, args.layers, args.mode)

python dream.py -i input.jpg -o output-directory -md insane

Here with the Windows 7 "King Penguins" Wallpaper as input.